4/6

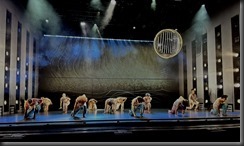

Today I am grateful to see "Churchill" at the Broadway Playhouse last night.

4/5

Today I am grateful to deliver a presentation at the Roanoke Valley .NET User Group last night.

4/4

Today I am grateful for monster movies.

4/3

Today I am grateful to deliver a presentation and participate in a Fireside Chat at the Illinois Institute of Technology.

4/2

Today I am grateful to repair my bike tire yesterday.

4/1

Today I am grateful for Easter brunch with Tim and Natale yesterday.

3/31

Today I am grateful for the resurrection of Jesus Christ.

3/30

Today I am grateful to those who are willing to share their knowledge with others.

3/29

Today I am grateful for a call from Suzanne yesterday.

3/28

Today I am grateful:

- to spend a few minutes with Jeff yesterday afternoon

- to attend the Chicago Java User Group last night

3/27

Today I am grateful to those who publicly praised the talks I delivered last week.

3/26

Today I am grateful for the men that my sons have become.

3/25

Today I am grateful for my new iPhone.

3/24

Today I am grateful:

- for coffee with Karen yesterday morning

- to see "On Your Feet" last night at the CIBC Theatre

3/23

Today I am grateful:

- for the hospitality of Ondrej and Desislava

- to stay up late last night playing board games with Gaines, Brian, and Ondrej

- to the organizers of the Michigan Technology Conference for an excellent event

3/22

Today I am grateful to deliver a keynote presentation this morning at the Michigan Technology Conference at UWM.

3/21

Today I am grateful for a speaker dinner last night in Pontiac.

3/20

Today I am grateful to attend AICamp last night.

3/19

Today I am grateful for the start of spring.

3/18

Today I am grateful for 26 consecutive NCAA Tournament appearances.

3/17

Today I am grateful:

- to be at the Chicago River yesterday morning during the annual river dyeing

- to attend a Nowruz celebration last night

3/16

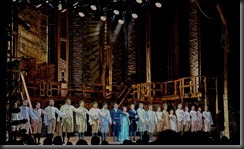

Today I am grateful to see "My Fair Lady" performed live at the Nederlander Theatre last night.

3/15

Today I am grateful:

- to attend the Enterprise GenAI for Leaders event yesterday

- for a visit from Nick

3/14

Today I am grateful for 1,000 subscribers to my #GCast channel.

3/13

Today I am grateful to speak at ElasticON yesterday in the Willis Tower.

3/12

Today I am grateful:

- to Matt Ruma for helping me with my Copilot Studio demo yesterday

- to speak about AI at an Elastic meetup yesterday

- for a drink at Cindy's Rooftop last night with a beautiful view of the city

3/11

Today I am grateful to see Rickie Lee Jones in concert last night.

3/10

Today I am grateful to see the Bobby Lewis Quintet at the Jazz Showcase last night.

3/9

Today I am grateful to attend a high school student field trip to the Microsoft office yesterday.

3/8

Today I am grateful for a birthday dinner with my kids last night.

3/6

Today I am grateful to see "Mrs. Doubtfire - the Musical" last night.

3/5

Today I am grateful that my espresso machine is now repaired.

3/4

Today I am grateful to see "Message in a Bottle" featuring the music of Sting last night.