Parsing information from a web page is not a trivial task. Fortunately, HTML has a defined structure and libraries exist to help us navigate that structure.

One such library for C# is the HTML Agility Pack (HAP).

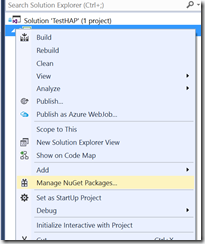

You can add this library to a project via NuGet. Simply right-click your project in the Visual Studio Solution Explorer, select "Manage NuGet Packages" (Fig. 1); then search for "HTML Agility Pack" and install the package (Fig. 2).

Once the package is installed, you can load your document into an HtmlAgilityPack.HtmlDocument and begin working with it.

There are four ways to load a web page into an HtmlDocument: from a file on disk, from a string of HTML, from a URL, and from whatever document is loaded in a browser.

Below are examples of each (taken from the HAP web site).

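```csharp
using HtmlAgilityPack;
using System.Windows.Forms; // WebBrowser, used by the "From Browser" example

// From File (filePath is a placeholder for the path to a saved .html file)
var docFromFile = new HtmlDocument();
docFromFile.Load(filePath);

// From String (html is a placeholder for a string of markup)
var docFromString = new HtmlDocument();
docFromString.LoadHtml(html);

// From Web
var url = "http://html-agility-pack.net/";
var web = new HtmlWeb();
var docFromWeb = web.Load(url);

// From Browser
var web1 = new HtmlWeb();
var docFromBrowser = web1.LoadFromBrowser(url, o =>
{
    var webBrowser = (WebBrowser) o;
    // WAIT until the dynamic text is set
    return !string.IsNullOrEmpty(webBrowser.Document.GetElementById("uiDynamicText").InnerText);
});
```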

Listing 1

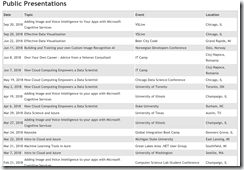

I am interested in this project because I have a web page that lists all my public presentations, and I want to make this page data-driven, so I won't have to update a text file every time I schedule a new presentation.

To populate a database with this information, I could either type in every presentation or grab the data from my web page and parse the relevant information out of its HTML table. Since I have delivered nearly 500 presentations, retyping each one individually seemed like way too much work.

Here is a screenshot of my "Public Presentations" page:

and here is a partial listing of the source HTML for that page.

```html
<table id="talklist">
  <tr style="height:15.0pt">
    <th style="height:15.0pt;width:62pt" width="83">Date</th>
    <th width="458">Topic</th>
    <th width="311">Event</th>
    <th width="64">Location</th>
  </tr>
  <tr style="height:15.0pt">
    <td>Sep 20, 2018</td>
    <td>Adding Image and Voice Intelligence to Your Apps with Microsoft Cognitive Services</td>
    <td>VSLive</td>
    <td>Chicago, IL</td>
  </tr>
  <tr style="height:15.0pt">
    <td>Sep 20, 2018</td>
    <td>Effective Data Visualization</td>
    <td>VSLive</td>
    <td>Chicago, IL</td>
  </tr>
  <tr style="height:15.0pt">
    <td>Jun 22, 2018</td>
    <td>Effective Data Visualization</td>
    <td>Beer City Code</td>
    <td>Grand Rapids, MI</td>
  </tr>
</table>
```

Listing 2

I used the following code to load the data into an HtmlDocument:

```csharp
// HtmlWeb.Load needs the page's full http(s) URL; the host name is omitted here
var url = "/Schedule.aspx";
Console.WriteLine("Getting data from {0}...", url);
var web = new HtmlWeb();
var doc = web.Load(url);
```

Listing 3

The HtmlDocument has a DocumentNode property, which returns the root node of the HTML document. Most of the time, I found myself working with nodes and collections of nodes. Each node has SelectSingleNode and SelectNodes methods, which return a single node and a collection of nodes, respectively. Both take an XPath expression, a syntax I was familiar with from my days working with XML documents.
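To try out XPath queries without hitting the live page, you can parse a string with LoadHtml. The sketch below assumes a trimmed, hypothetical copy of the table in Listing 2; the XPathDemo class and its methods are illustrative names, not part of HAP. It also shows two details worth knowing: the predicate tr[td] keeps only rows that contain td cells, and SelectNodes returns null (by default) rather than an empty collection when nothing matches.

```csharp
using System;
using System.Linq;
using HtmlAgilityPack;

// Hypothetical helper: runs XPath queries against a small, hard-coded copy of the talk table.
public static class XPathDemo
{
    // Trimmed stand-in for the markup in Listing 2 (not the live page).
    public const string SampleHtml =
        "<html><body><table id='talklist'>" +
        "<tr><th>Date</th><th>Topic</th><th>Event</th><th>Location</th></tr>" +
        "<tr><td>Sep 20, 2018</td><td>Effective Data Visualization</td><td>VSLive</td><td>Chicago, IL</td></tr>" +
        "<tr><td>Jun 22, 2018</td><td>Effective Data Visualization</td><td>Beer City Code</td><td>Grand Rapids, MI</td></tr>" +
        "</table></body></html>";

    // Parses the sample markup from a string rather than downloading it.
    public static HtmlDocument Load()
    {
        var doc = new HtmlDocument();
        doc.LoadHtml(SampleHtml);
        return doc;
    }

    // Returns the Event column (third cell) of every data row.
    public static string[] EventNames(HtmlDocument doc)
    {
        // tr[td] keeps only rows that contain <td> cells, so the <th> header row is skipped.
        var rows = doc.DocumentNode.SelectNodes("//table[@id='talklist']/tr[td]");

        // By default SelectNodes returns null, not an empty collection, when nothing matches.
        if (rows == null)
        {
            return Array.Empty<string>();
        }

        // XPath positions are 1-based, so td[3] is the third cell.
        return rows.Select(row => row.SelectSingleNode("td[3]").InnerText.Trim()).ToArray();
    }
}
```

Calling XPathDemo.EventNames(XPathDemo.Load()) should yield the two event names from the sample rows. Filtering with tr[td] in the query is an alternative to the null check on cells used later in this post.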

The following code retrieves a collection of all the row (`tr`) nodes within the "talklist" table shown in Listing 2.

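```csharp
var documentNode = doc.DocumentNode;

// Find the table by its id attribute
var tableNode = documentNode.SelectSingleNode("//table[@id='talklist']");

// "tr" matches rows that are direct children of the table (HAP does not insert a <tbody>)
var rowsNodesList = tableNode.SelectNodes("tr");
```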

Listing 4

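Finally, because each `<tr>` node contains four `<td>` cell nodes, I can iterate through the rows, find all the cells in each row, and read the InnerText of each cell. For good measure, I removed line breaks and trimmed leading and trailing whitespace. Because the title row contains `<th>` cells instead of `<td>` cells, I check for this before extracting information: SelectNodes returns null when it finds no matching nodes, so the title row yields no `td` collection and is skipped.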

The code for this is in Listing 5.

```csharp
var rowCount = 1;
foreach (var row in rowsNodesList)
{
    // The title row holds <th> cells, so SelectNodes("td") returns null for it
    var cells = row.SelectNodes("td");
    if (cells != null && cells.Count > 0)
    {
        // Remove line breaks and surrounding whitespace from each cell's text
        var date = cells[0].InnerText;
        date = date.Replace("\r\n", "").Trim();
        var topic = cells[1].InnerText;
        topic = topic.Replace("\r\n", "").Trim();
        var eventName = cells[2].InnerText;
        eventName = eventName.Replace("\r\n", "").Trim();
        var location = cells[3].InnerText;
        location = location.Replace("\r\n", "").Trim();

        Console.WriteLine("Row: {0}", rowCount);
        Console.WriteLine("Date: {0}", date);
        Console.WriteLine("Topic: {0}", topic);
        Console.WriteLine("Event: {0}", eventName);
        Console.WriteLine("Location: {0}", location);
        Console.WriteLine("--------------------");
        rowCount++;
    }
}
```

Listing 5
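Replace("\r\n", "").Trim() works for this page, but it has two blind spots: InnerText may still contain HTML entities such as `&amp;` or `&nbsp;` (this can depend on the HAP version), and removing a line break that is the only separator between two words glues them together. The sketch below is one possible stricter cleanup, not the code I used; CellText and Clean are hypothetical names, and it relies on the .NET WebUtility.HtmlDecode method rather than anything HAP-specific.

```csharp
using System.Net;
using System.Text.RegularExpressions;

// Hypothetical helper: a stricter alternative to Replace("\r\n", "").Trim().
public static class CellText
{
    // Any run of whitespace; in .NET, \s also covers tabs, lone \n and non-breaking spaces.
    private static readonly Regex Whitespace = new Regex(@"\s+");

    public static string Clean(string rawInnerText)
    {
        if (string.IsNullOrEmpty(rawInnerText))
        {
            return string.Empty;
        }

        // Turn entities such as &amp; or &nbsp; into the characters they represent.
        var decoded = WebUtility.HtmlDecode(rawInnerText);

        // Collapse each whitespace run (including line breaks) to one space, then trim the ends.
        return Whitespace.Replace(decoded, " ").Trim();
    }
}
```

With this helper, each pair of lines in Listing 5 could shrink to something like var date = CellText.Clean(cells[0].InnerText);. Replacing line breaks with a space instead of deleting them keeps words on either side of a break apart.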

Here is the full code listing for my console app that retrieves the text of each cell.

```csharp
using HtmlAgilityPack;
using System;

namespace TestHAP
{
    class Program
    {
        static void Main(string[] args)
        {
            // HtmlWeb.Load needs the page's full http(s) URL; the host name is omitted here
            var url = "/Schedule.aspx";
            Console.WriteLine("Getting data from {0}...", url);
            var web = new HtmlWeb();
            var doc = web.Load(url);

            var documentNode = doc.DocumentNode;
            var tableNode = documentNode.SelectSingleNode("//table[@id='talklist']");

            // SelectSingleNode and SelectNodes return null when nothing matches
            var rowsNodesList = tableNode?.SelectNodes("tr");
            if (rowsNodesList == null)
            {
                Console.WriteLine("No rows found in the talklist table.");
                return;
            }

            var rowCount = 1;
            foreach (var row in rowsNodesList)
            {
                // The title row holds <th> cells, so SelectNodes("td") returns null for it
                var cells = row.SelectNodes("td");
                if (cells != null && cells.Count > 0)
                {
                    var date = cells[0].InnerText;
                    date = date.Replace("\r\n", "").Trim();
                    var topic = cells[1].InnerText;
                    topic = topic.Replace("\r\n", "").Trim();
                    var eventName = cells[2].InnerText;
                    eventName = eventName.Replace("\r\n", "").Trim();
                    var location = cells[3].InnerText;
                    location = location.Replace("\r\n", "").Trim();

                    Console.WriteLine("Row: {0}", rowCount);
                    Console.WriteLine("Date: {0}", date);
                    Console.WriteLine("Topic: {0}", topic);
                    Console.WriteLine("Event: {0}", eventName);
                    Console.WriteLine("Location: {0}", location);
                    Console.WriteLine("--------------------");
                    rowCount++;
                }
            }

            // Keep the console window open until Enter is pressed
            Console.ReadLine();
        }
    }
}
```

Listing 6

You can download this solution from my GitHub repository.

Although I don’t need it for this project, HAP also lets you modify the HTML you select, using node methods such as AppendChild(), InsertAfter(), and RemoveChild().
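A minimal sketch of those methods, assuming a document that already holds a copy of the talklist table (for example, loaded with LoadHtml). TableEditDemo, CreateRow, and the placeholder row values are hypothetical; CreateElement, CreateTextNode, AppendChild, InsertAfter, and RemoveChild are HAP members. The edits change only the parsed document in memory, never the web page itself.

```csharp
using System.Net;
using HtmlAgilityPack;

// Hypothetical helper showing in-memory edits to a parsed table.
public static class TableEditDemo
{
    // Builds a <tr> with one <td> per value; values are HTML-encoded before becoming text nodes.
    public static HtmlNode CreateRow(HtmlDocument doc, params string[] values)
    {
        var row = doc.CreateElement("tr");
        foreach (var value in values)
        {
            var cell = doc.CreateElement("td");
            cell.AppendChild(doc.CreateTextNode(WebUtility.HtmlEncode(value)));
            row.AppendChild(cell);
        }
        return row;
    }

    // Inserts a row after the header, appends one at the end, then removes the header row.
    public static HtmlNode Edit(HtmlDocument doc)
    {
        var table = doc.DocumentNode.SelectSingleNode("//table[@id='talklist']");
        var header = table.SelectSingleNode("tr[th]");

        // Placeholder values, not real presentations.
        table.InsertAfter(CreateRow(doc, "Oct 1, 2018", "Sample Talk", "Sample Event", "Sample City"), header);
        table.AppendChild(CreateRow(doc, "Nov 1, 2018", "Another Talk", "Another Event", "Another City"));
        table.RemoveChild(header);

        return table;
    }
}
```

After calling Edit, doc.DocumentNode.OuterHtml returns the modified markup, and doc.Save(path) writes it to a file.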

This tool will help me to retrieve and parse the hundreds of rows of data from my web page and insert them into a database.
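Before the rows go into a database, it may help to turn the date strings into real DateTime values so presentations can be sorted and filtered by date. The sketch below assumes every date on the page follows the "Sep 20, 2018" pattern shown in Listing 2; the Presentation record and PresentationParser class are hypothetical and do not reflect any particular database schema.

```csharp
#nullable enable
using System;
using System.Globalization;

// Hypothetical shape of one database row.
public sealed record Presentation(DateTime Date, string Topic, string EventName, string Location);

public static class PresentationParser
{
    // Matches dates like "Sep 20, 2018" or "Jun 22, 2018".
    private const string DateFormat = "MMM d, yyyy";

    // Returns null when the date text cannot be parsed, so bad rows can be skipped or logged.
    public static Presentation? TryCreate(string date, string topic, string eventName, string location)
    {
        // InvariantCulture keeps English month abbreviations regardless of the machine's locale.
        if (!DateTime.TryParseExact(date, DateFormat, CultureInfo.InvariantCulture,
                                    DateTimeStyles.None, out var parsed))
        {
            return null;
        }

        return new Presentation(parsed, topic, eventName, location);
    }
}
```

In the loop from Listing 5, a call such as PresentationParser.TryCreate(date, topic, eventName, location) could replace the Console.WriteLine calls, with each non-null result passed to whatever data-access code performs the insert.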