It's difficult enough for humans to recognize emotions in the faces of other humans. Can a computer accomplish this task? It can, if we train it and give it enough examples of faces showing different emotions.

When we supply data to a computer with the objective of training that computer to recognize patterns and make predictions about new data, we call that Machine Learning. Microsoft has applied Machine Learning to a lot of faces and a lot of data, and it exposes the results for you to use.

As I discussed in a previous article, Microsoft Cognitive Services includes a set of APIs that allow your applications to take advantage of Machine Learning in order to analyze images, sound, video, and language.

The Cognitive Services Emotion API looks at photographs of people and determines the emotion of each person in the photo. Supported emotions are anger, contempt, disgust, fear, happiness, neutral, sadness, and surprise. Each emotion is assigned a score between 0 and 1; a higher number indicates higher confidence that this is the emotion expressed in the face. If a picture contains multiple faces, the emotion of each face is returned.
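Because every face gets a score for each supported emotion, picking the single most likely emotion comes down to finding the highest score. Below is a minimal C# sketch, assuming the scores for one face have already been read into a dictionary keyed by emotion name; the `EmotionScores` class and its `Dominant` method are hypothetical names, not part of the API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: not part of the Emotion API itself.
public static class EmotionScores
{
    // Returns the emotion with the highest score. Each score is between 0 and 1,
    // and the service returns one per supported emotion
    // (anger, contempt, disgust, fear, happiness, neutral, sadness, surprise).
    public static string Dominant(IReadOnlyDictionary<string, double> scores)
    {
        if (scores == null || scores.Count == 0)
            throw new ArgumentException("At least one score is required.", nameof(scores));

        // Sort descending by score and take the top entry's emotion name.
        return scores.OrderByDescending(pair => pair.Value).First().Key;
    }
}
```

Applied to the scores of the first face in the sample response later in this article, this returns "happiness".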

To get started, you will need an Azure account and a Cognitive Services Emotion API key.

If you don't have an Azure account, you can get a free one at https://azure.microsoft.com/free/.

Once you have an Azure account, follow the instructions in this article to generate a Cognitive Services key for the Emotion API.

To use this API, you simply have to make a POST request to the following URL:

https://[location].api.cognitive.microsoft.com/emotion/v1.0/recognize

where [location] is the Azure location where you created your API key (above).
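Since only the location portion of the URL changes, the endpoint can be built from the region name. A small sketch, assuming the location is the short region name (such as `westus`, which the examples in this article use); `EmotionEndpoint.ForLocation` is a hypothetical helper name.

```csharp
using System;

// Hypothetical helper for building the Emotion API "recognize" URL.
public static class EmotionEndpoint
{
    // location is the Azure region where the key was created, e.g. "westus".
    public static Uri ForLocation(string location)
    {
        if (string.IsNullOrWhiteSpace(location))
            throw new ArgumentException("A location is required.", nameof(location));

        return new Uri($"https://{location}.api.cognitive.microsoft.com/emotion/v1.0/recognize");
    }
}
```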

The HTTP headers of the request should include the following:

Ocp-Apim-Subscription-Key

This is the Cognitive Services key you generated above.

Content-Type

This tells the service how you will send the image. The options are:

- application/json

- application/octet-stream

If the image is accessible via a public URL, set the Content-Type to application/json and send JSON in the body of the HTTP request in the following format:

{"url":"imageurl"}

where imageurl is a public URL pointing to the image. For example, to analyze the emotions in this picture of a happy face and an unhappy face, submit the following JSON:

{"url":"/content/binary/Open-Live-Writer/Using-the-Cognitive-Services-Emotion-API_14A56/TwoEmotions_2.jpg"}

If you plan to send the image itself to the web service, set the Content-Type to application/octet-stream and submit the binary image in the body of the HTTP request.
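When the image is a local file, the request body is simply the file's bytes. A minimal sketch, assuming the image is stored on disk; `EmotionImageContent.FromFile` is a hypothetical helper name. The resulting content object could be passed to `HttpClient.PostAsync` in place of a JSON body.

```csharp
using System.IO;
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical helper for the application/octet-stream option.
public static class EmotionImageContent
{
    // Reads a local image file and wraps its raw bytes as the request body.
    public static HttpContent FromFile(string imagePath)
    {
        byte[] imageBytes = File.ReadAllBytes(imagePath);
        var content = new ByteArrayContent(imageBytes);

        // Tells the service the body is the binary image itself, not JSON.
        content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
        return content;
    }
}
```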

A full request looks something like this:


POST https://westus.api.cognitive.microsoft.com/emotion/v1.0/recognize HTTP/1.1
Content-Type: application/json
Host: westus.api.cognitive.microsoft.com
Content-Length: 62
Ocp-Apim-Subscription-Key: xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

{ "url": "http://xxxx.com/xxxx.jpg" }
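The same request can be assembled in C# with `HttpRequestMessage`, which makes each part of the raw request explicit. This is a sketch, assuming the `westus` location and .NET Core 3.0 or later (for `System.Text.Json`); `EmotionRequest.Build` is a hypothetical name. The `Host` and `Content-Length` headers are not set by hand, because `HttpClient` derives them from the URI and the body when the request is sent.

```csharp
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Text.Json;

// Hypothetical helper that mirrors the raw POST request above.
public static class EmotionRequest
{
    public static HttpRequestMessage Build(string subscriptionKey, string imageUrl)
    {
        // Request line: POST to the recognize endpoint.
        var request = new HttpRequestMessage(
            HttpMethod.Post,
            "https://westus.api.cognitive.microsoft.com/emotion/v1.0/recognize");

        // Authenticates the call with your Cognitive Services key.
        request.Headers.Add("Ocp-Apim-Subscription-Key", subscriptionKey);

        // Body {"url":"..."}; the serializer applies any JSON escaping the URL needs.
        string json = JsonSerializer.Serialize(new { url = imageUrl });
        request.Content = new StringContent(json, Encoding.UTF8);
        request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/json");

        return request;
    }
}
```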

For example, passing the URL of a picture of 3 attractive, smiling people (found online at https://giard.smugmug.com/Tech-Community/SpartaHack-2016/i-4FPV9bf/0/X2/SpartaHack-068-X2.jpg) returned the following data:

[
  {
    "faceRectangle": {
      "height": 113,
      "left": 285,
      "top": 156,
      "width": 113
    },
    "scores": {
      "anger": 1.97831262E-09,
      "contempt": 9.096525E-05,
      "disgust": 3.86221245E-07,
      "fear": 4.26409547E-10,
      "happiness": 0.998336,
      "neutral": 0.00156954059,
      "sadness": 8.370223E-09,
      "surprise": 3.06117772E-06
    }
  },
  {
    "faceRectangle": {
      "height": 108,
      "left": 831,
      "top": 169,
      "width": 108
    },
    "scores": {
      "anger": 2.63808062E-07,
      "contempt": 5.387114E-08,
      "disgust": 1.3360991E-06,
      "fear": 1.407629E-10,
      "happiness": 0.9999967,
      "neutral": 1.63170478E-06,
      "sadness": 2.52861843E-09,
      "surprise": 1.91028926E-09
    }
  },
  {
    "faceRectangle": {
      "height": 100,
      "left": 591,
      "top": 168,
      "width": 100
    },
    "scores": {
      "anger": 3.24157673E-10,
      "contempt": 4.90155344E-06,
      "disgust": 6.54665473E-06,
      "fear": 1.73284559E-06,
      "happiness": 0.9999156,
      "neutral": 6.42121E-05,
      "sadness": 7.02297257E-06,
      "surprise": 5.53670576E-09
    }
  }
]
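To work with this response in code, you can read each face's rectangle and scores. Here is a sketch using `System.Text.Json` (.NET Core 3.0 or later), assuming the response body has already been read into a string; the `Face` class and `EmotionResponse.Parse` are hypothetical names that simply mirror the JSON above.

```csharp
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical type mirroring one element of the response array.
public sealed class Face
{
    public int Left { get; set; }
    public int Top { get; set; }
    public int Width { get; set; }
    public int Height { get; set; }
    public Dictionary<string, double> Scores { get; } = new Dictionary<string, double>();
}

public static class EmotionResponse
{
    // Parses the JSON array returned by the recognize call: one element per face.
    public static List<Face> Parse(string json)
    {
        var faces = new List<Face>();
        using JsonDocument doc = JsonDocument.Parse(json);

        foreach (JsonElement element in doc.RootElement.EnumerateArray())
        {
            JsonElement rect = element.GetProperty("faceRectangle");
            var face = new Face
            {
                Left = rect.GetProperty("left").GetInt32(),
                Top = rect.GetProperty("top").GetInt32(),
                Width = rect.GetProperty("width").GetInt32(),
                Height = rect.GetProperty("height").GetInt32()
            };

            // Copy every emotion score returned for this face.
            foreach (JsonProperty score in element.GetProperty("scores").EnumerateObject())
                face.Scores[score.Name] = score.Value.GetDouble();

            faces.Add(face);
        }
        return faces;
    }
}
```

Passing the response above to `Parse` would yield three `Face` objects, each with a happiness score above 0.99.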

The high happiness scores for all 3 faces, together with the very low values for every other emotion, suggest a very high degree of confidence that each person in this photo is happy.

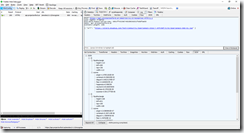

Here is the request in the popular HTTP analysis tool Fiddler [http://www.telerik.com/fiddler]:


Below is a C# code snippet making a request to this service to analyze the emotions of the people in an online photograph. You can download the full application at https://github.com/DavidGiard/CognitiveSvcsDemos.

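using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Threading.Tasks;

// Illustrative wrapper method (the name is hypothetical): sends a public image URL
// to the Emotion API and returns the JSON response, or null if the call fails.
static async Task<string> RecognizeEmotionsAsync(string imageUrl)
{
    string emotionApiKey = "XXXXXXXXXXXXXXXXXXXXXXX";
    var client = new HttpClient();
    client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", emotionApiKey);
    string uri = "https://westus.api.cognitive.microsoft.com/emotion/v1.0/recognize";
    HttpResponseMessage response;

    // Valid JSON requires double quotes: {"url": "..."}
    var json = "{\"url\": \"" + imageUrl + "\"}";
    byte[] byteData = Encoding.UTF8.GetBytes(json);
    using (var content = new ByteArrayContent(byteData))
    {
        content.Headers.ContentType = new MediaTypeHeaderValue("application/json");
        response = await client.PostAsync(uri, content);
    }

    if (response.IsSuccessStatusCode)
    {
        // A JSON array with a faceRectangle and scores object for each face
        var data = await response.Content.ReadAsStringAsync();
        return data;
    }
    return null;
}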

You can find the full documentation for this API, including an in-browser testing tool, here.

Sending requests to the Cognitive Services Emotion API makes it simple to analyze the emotions of people in a photograph.